Fact Verification with Good and Grounded Generation (G3)

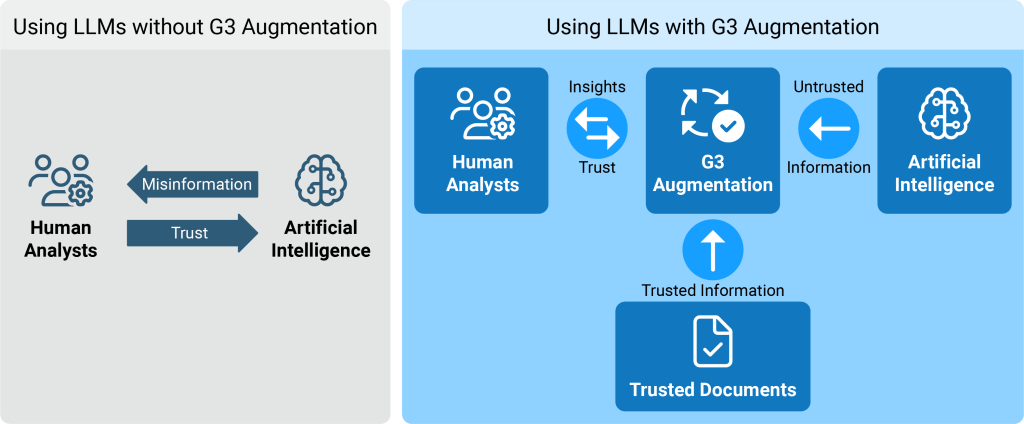

Noblis is building responsible and explainable artificial intelligence (AI) solutions that enable trust and transparency in state-of-the-art and emerging technologies. Good and Grounded Generation (G3) is an emerging Noblis approach to counter the threat of misinformation that malicious or unwitting actors generate with large language models (LLMs). Through semantic mapping and logical validation, G3 empowers a fact-based review of generated outputs.

The Challenge

Recent innovations in natural language generation (NLG) technology have produced LLMs that excel at generating humanlike text. These systems have the potential to reframe authorship as a collaborative process between human and machine. By rapidly processing large quantities of information and providing a natural language interface for probing this data, LLMs could drastically increase the efficiency of human-driven data investigation and authorship. However, LLMs usually lack explainability guardrails and can produce convincing misinformation, which makes this exciting technology dangerous as well, especially for missions where truth is non-negotiable.

Our Solution

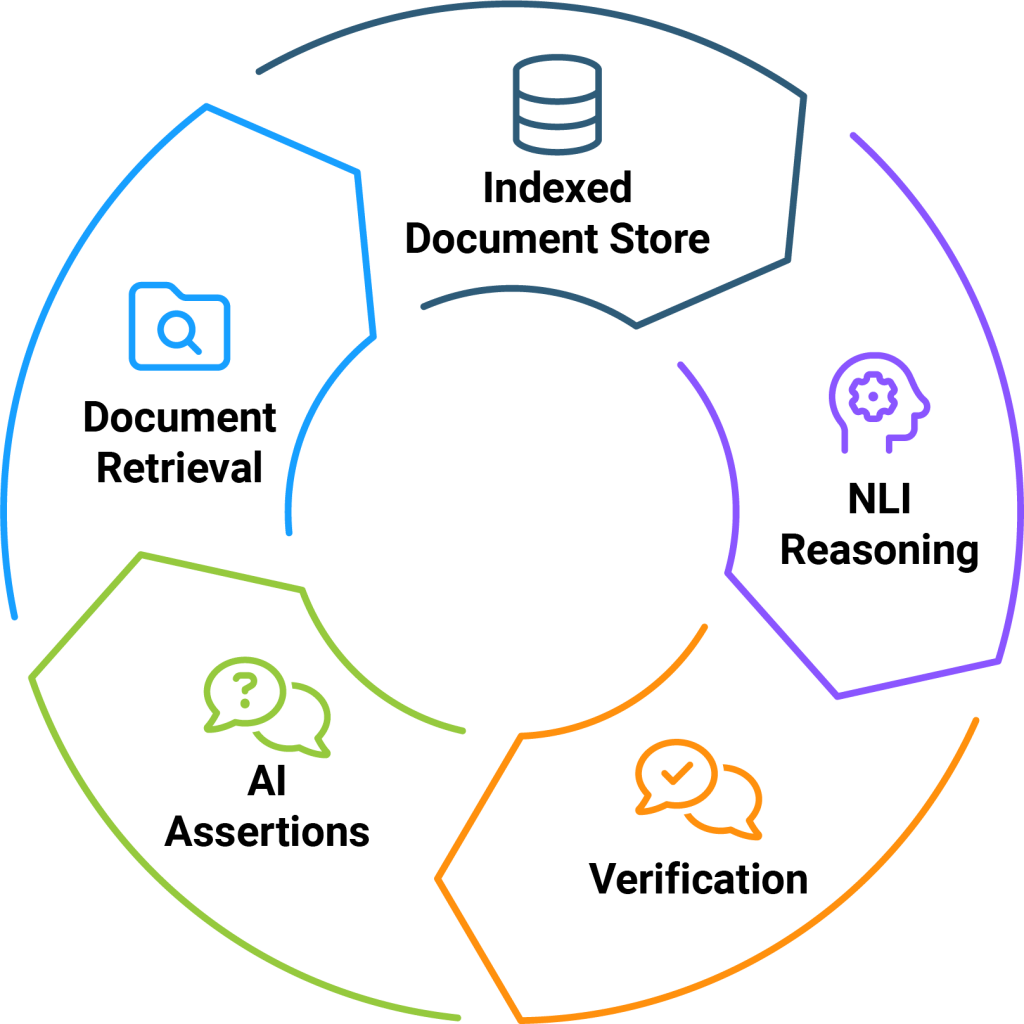

Noblis believes that the threats posed by LLMs may be mitigated by careful regard for established ground truth information. Our G3 approach explores the potential for automated factual verification of LLM outputs (figure 1). Our fact-checking pipeline consists of two main components: 1) fact extraction, which increases the semantic granularity of a document, and 2) fact verification, which allows extracted facts to be scrutinized for logical consistency with a known ground truth. We model ground truth information using an indexed vector database, which allows for quick document retrieval.
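To make the idea of an indexed vector store concrete, the following is a minimal sketch rather than the G3 implementation. It assumes a toy bag-of-words "embedding" and an in-memory list in place of a learned text encoder and a real vector database. The names `embed`, `cosine`, and `GroundTruthIndex` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a term-count vector (assumed stand-in for a learned encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class GroundTruthIndex:
    """Hypothetical in-memory index of trusted documents."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Store a trusted document together with its vector."""
        self._docs.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        """Return up to k (score, document) pairs, best match first."""
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self._docs]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


index = GroundTruthIndex()
index.add("The Eiffel Tower is located in Paris.")
index.add("Mount Everest is the highest mountain on Earth.")
top_hits = index.search("Where is the Eiffel Tower located?", k=1)
```

A production system would likely replace the linear scan in `search` with an approximate nearest-neighbor index so that retrieval stays fast across millions of documents.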

We conduct fact extraction through open information extraction (open IE) that allows us to pull out the granular assertions in a text. We perform extraction for both trusted ground truth documents and for LLM-generated information. After extraction, we register the ground truth facts into a database for later reference during verification.
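As an illustration of what extracted facts might look like, here is a small sketch that represents each assertion as a subject-relation-object triple. It is an assumption-laden toy: real open IE relies on trained models and needs no fixed relation vocabulary, whereas this example uses a hypothetical regular expression over three hand-picked relations. `Fact` and `extract_facts` are illustrative names, not part of G3.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One granular assertion pulled from a text."""
    subject: str
    relation: str
    obj: str
    source: str  # e.g. "ground_truth" or "llm"


# Hypothetical, tiny relation vocabulary (longest alternatives first).
# A trained open IE model would discover relations instead.
_PATTERN = re.compile(
    r"^(?P<subject>.+?)\s+"
    r"(?P<relation>is located in|was founded in|is)\s+"
    r"(?P<obj>.+?)\.?$"
)


def extract_facts(text: str, source: str) -> list[Fact]:
    """Split text into sentences and keep those matching the toy pattern."""
    facts = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        match = _PATTERN.match(sentence)
        if match:
            facts.append(Fact(
                subject=match["subject"].strip(),
                relation=match["relation"],
                obj=match["obj"].strip(),
                source=source,
            ))
    return facts


# Extraction runs on both trusted documents and LLM output.
trusted_text = "The Eiffel Tower is located in Paris. Paris is the capital of France."
fact_store = extract_facts(trusted_text, source="ground_truth")
generated_facts = extract_facts("The Eiffel Tower is located in Rome.", source="llm")
```

Here `fact_store` plays the role of the ground truth database in which trusted facts are registered for later verification.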

Once facts have been enumerated, we compare LLM-generated facts to those in our trusted ground truth database. Using natural language inference (NLI), we detect whether each generated fact is entailed by, contradicted by, or ungrounded with respect to the trusted ground truth repository. Through an indexed search and document filtration (figure 2), we can quickly find the most relevant information for comparison from potentially millions of documents in the ground truth repository. This verification step overcomes some of the weaknesses of a no-ontology approach to IE by using natural language understanding technology to decipher fuzzy entity descriptions and relations.
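The retrieve-then-verify flow described above might be sketched as follows. This is not the G3 verifier: `toy_nli` is a hypothetical placeholder for a trained NLI model and simply assumes that two facts with the same subject and relation but different objects contradict each other. `retrieve`, `verify`, and `Verdict` are also illustrative names.

```python
from enum import Enum

Triple = tuple[str, str, str]  # (subject, relation, object)


class Verdict(Enum):
    ENTAILED = "entailed"
    CONTRADICTED = "contradicted"
    UNGROUNDED = "ungrounded"


def retrieve(claim: Triple, store: list[Triple]) -> list[Triple]:
    """Filtration step: keep trusted facts with the same subject and relation."""
    subject, relation, _ = claim
    return [
        fact for fact in store
        if fact[0].lower() == subject.lower() and fact[1] == relation
    ]


def toy_nli(premise: Triple, hypothesis: Triple) -> Verdict:
    """Placeholder for an NLI model (assumption: differing objects contradict)."""
    if premise[2].lower() == hypothesis[2].lower():
        return Verdict.ENTAILED
    return Verdict.CONTRADICTED


def verify(claim: Triple, store: list[Triple]) -> Verdict:
    """Label a generated fact against the trusted store."""
    candidates = retrieve(claim, store)
    if not candidates:
        # Nothing relevant in the ground truth: neither supported nor refuted.
        return Verdict.UNGROUNDED
    verdicts = [toy_nli(premise, claim) for premise in candidates]
    # Design choice in this sketch: one supporting fact is enough.
    if Verdict.ENTAILED in verdicts:
        return Verdict.ENTAILED
    return Verdict.CONTRADICTED


store = [("The Eiffel Tower", "is located in", "Paris")]
supported = verify(("The Eiffel Tower", "is located in", "Paris"), store)
refuted = verify(("The Eiffel Tower", "is located in", "Rome"), store)
unknown = verify(("The Colosseum", "is located in", "Rome"), store)
```

The three-way outcome matters: a fact that is merely absent from the ground truth is reported as ungrounded rather than as false.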

Figure 2: Visualization of the document retrieval and fact validation solution components.

Use Cases

As a fact-checking solution, G3 offers unique control over LLM-generated text. Following are some potential use cases for this technology:

- Embedded into user workflows, G3 could substantially augment human analysts in validating LLM-generated text against a large body of trusted ground truth.

- In fully automated environments, G3 could be used to decide, without human intervention, whether a generated text is sufficiently truthful based on the percentage of asserted facts in the text that contain no errors.

- No knowledge of or access into the LLM is required, as G3 can be applied to any model in a black-box manner.

- Aside from LLM applications, G3 can also be applied to human-generated output for uses such as misinformation detection.

- In the case of classified or controlled information, G3 could be implemented in a way that allows for separate validation of LLM-generated outputs against differently classified data stores without cross-contamination.
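The fully automated use case above, where a text is accepted based on the share of its facts that contain no errors, could be sketched as follows. The threshold value, the treatment of ungrounded facts, and the function names are all assumptions made for illustration and are not specified by G3.

```python
def truthfulness_score(verdicts: list[str], ungrounded_is_error: bool = True) -> float:
    """Fraction of asserted facts judged error-free.

    verdicts holds one label per extracted fact: "entailed",
    "contradicted", or "ungrounded". Whether an ungrounded fact counts
    as an error is a policy choice, exposed here as a flag (assumption).
    """
    if not verdicts:
        # Conservative assumption: no verifiable facts means no evidence of truth.
        return 0.0
    ok_labels = {"entailed"} if ungrounded_is_error else {"entailed", "ungrounded"}
    error_free = sum(1 for verdict in verdicts if verdict in ok_labels)
    return error_free / len(verdicts)


def accept(verdicts: list[str], threshold: float = 0.9,
           ungrounded_is_error: bool = True) -> bool:
    """Decide, without human review, whether a text is sufficiently truthful."""
    return truthfulness_score(verdicts, ungrounded_is_error) >= threshold


verdicts = ["entailed", "entailed", "contradicted", "ungrounded"]
strict_score = truthfulness_score(verdicts)
lenient_score = truthfulness_score(verdicts, ungrounded_is_error=False)
```

In a mission where truth is non-negotiable, the stricter setting, which treats ungrounded facts as errors, is probably the safer default.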

Contact our experts at answers@noblis.org to learn more about G3.

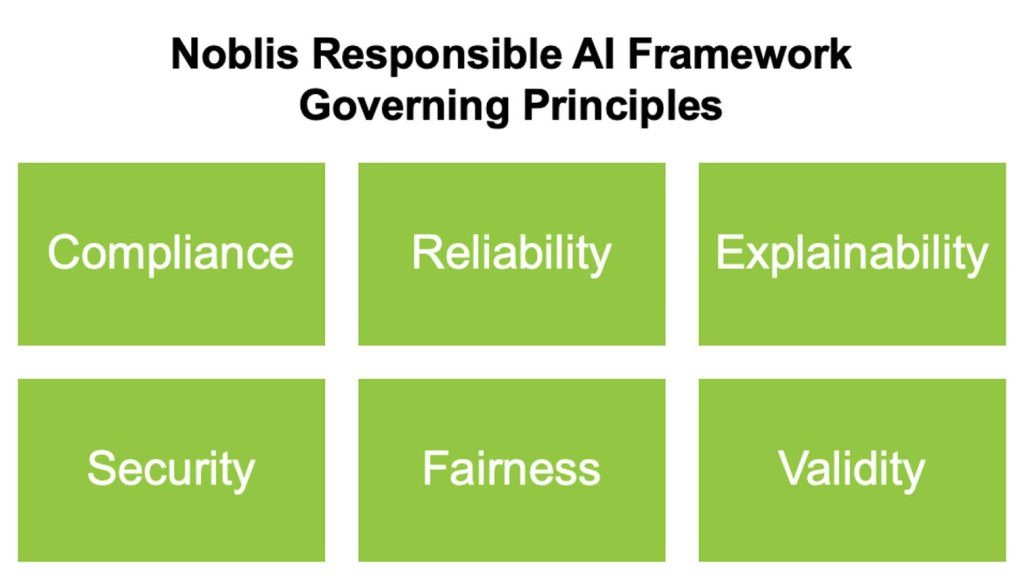

More to Explore: The Noblis Framework for Responsible AI

Our G3 technology addresses trustworthiness and explainability, two related and very active areas of research at Noblis and across the broader AI community. Both must be built into the development cycle to spur widespread adoption of AI capabilities. To encourage the development of risk-aware AI systems that are responsible, trustworthy, and explainable, Noblis has developed a Responsible Artificial Intelligence (AI) governance framework (figure 3) that consists of three elements: a set of Responsible AI Principles, a System Development Life Cycle, and an AI System Review Process. Collectively, these elements aim to:

- Ensure compliance with all current ethical standards, laws, and regulations associated with the development of AI systems;

- Provide clear instructions to organizations engaged in the design, development, and deployment of AI systems in connection with support to a government client; and

- Articulate a governance process pursuant to which supported and supporting organizations build trust in the AI systems.